Attestation performance is arguably the best-known performance metric for Ethereum validators. It is the "effectiveness" rating on beaconcha.in and is most often the reason for a profit or loss in each epoch.

In v4.6.0, Lighthouse released a new mechanism for tracking the performance of a Beacon Node. This mechanism internally simulates and evaluates the production of an attestation every epoch, regardless of the presence of any local validators.

The attestation simulator was described in sigp/lighthouse#4526 and implemented by @v4lproik in sigp/lighthouse#4880.

Each simulated attestation is evaluated approximately 16 slots after it is produced to determine if it would have received head, target or source rewards. The results of this evaluation are exposed in the following Prometheus counter metrics:

- validator_monitor_attestation_simulator_head_attester_hit_total
- validator_monitor_attestation_simulator_head_attester_miss_total
- validator_monitor_attestation_simulator_target_attester_hit_total
- validator_monitor_attestation_simulator_target_attester_miss_total
- validator_monitor_attestation_simulator_source_attester_hit_total
- validator_monitor_attestation_simulator_source_attester_miss_total
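If you want to inspect these counters without Grafana, a minimal Python sketch like the one below could pull them out of the text a Prometheus metrics endpoint returns. It assumes the counters appear as plain `name value` lines with no labels or timestamps, and the sample text and its values are made up for illustration rather than taken from a real node.

```python
from typing import Dict

PREFIX = "validator_monitor_attestation_simulator_"


def parse_simulator_counters(metrics_text: str) -> Dict[str, float]:
    """Extract attestation simulator counters from Prometheus text output.

    Assumes unlabelled "name value" lines (an assumption of this sketch).
    """
    counters: Dict[str, float] = {}
    for line in metrics_text.splitlines():
        line = line.strip()
        # Skip blank lines and "# HELP" / "# TYPE" comment lines.
        if not line or line.startswith("#"):
            continue
        name, _, value = line.rpartition(" ")
        if name.startswith(PREFIX):
            counters[name] = float(value)
    return counters


# Hypothetical sample; the numbers are invented for illustration.
SAMPLE = """\
# TYPE validator_monitor_attestation_simulator_head_attester_hit_total counter
validator_monitor_attestation_simulator_head_attester_hit_total 995
validator_monitor_attestation_simulator_head_attester_miss_total 5
some_other_metric 42
"""

if __name__ == "__main__":
    for name, value in parse_simulator_counters(SAMPLE).items():
        print(name, value)
```

In practice you would pass in whatever text your node's metrics endpoint returns instead of `SAMPLE`.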

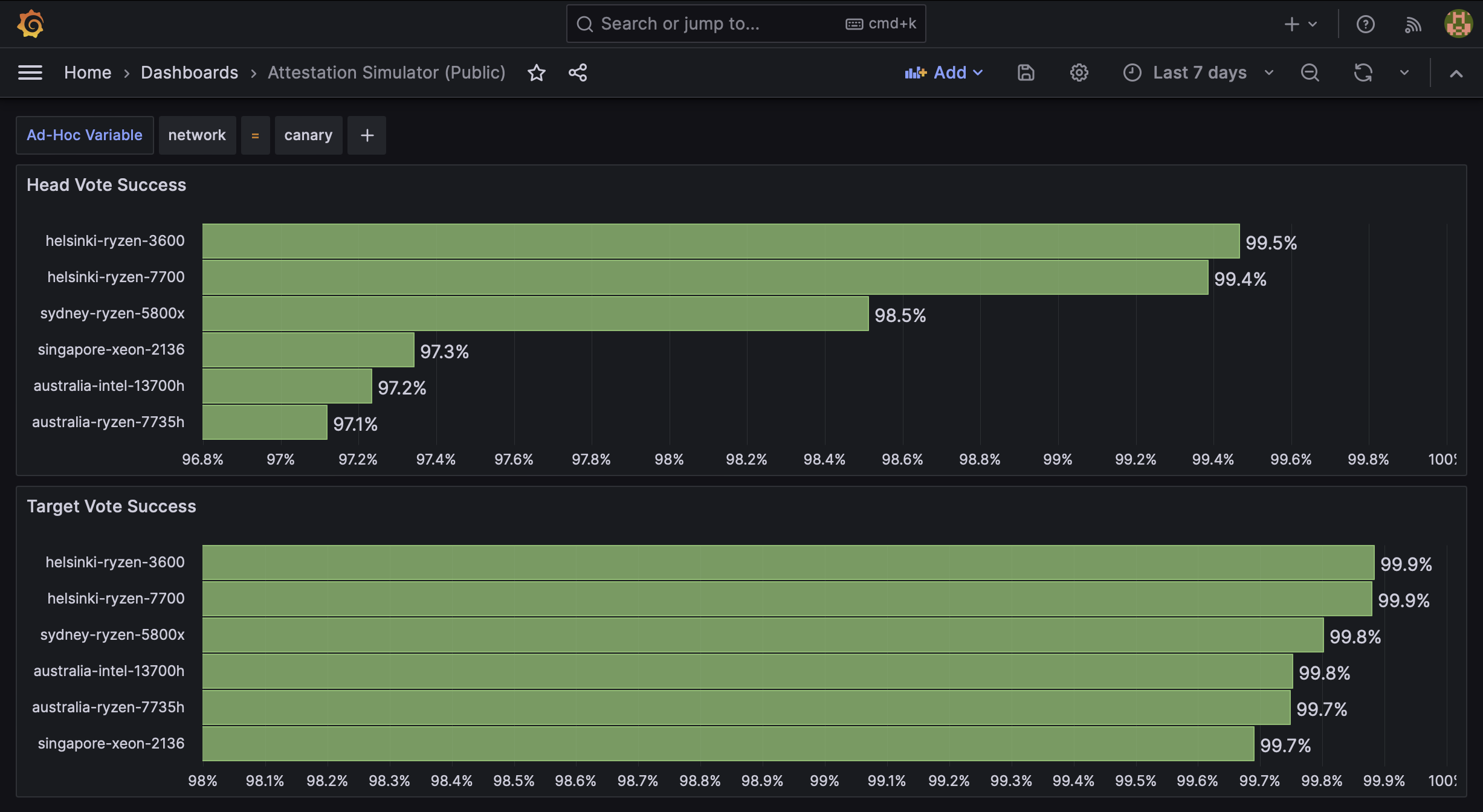

With these metrics, we can produce graphs which show the attestation performance for each node:

"Source Vote Success" has been omitted since it was all 100%.

These graphs are Grafana "bar charts" over the following Prometheus queries:

"Head Vote Success" Query

increase(validator_monitor_attestation_simulator_head_attester_hit_total[$__range]) /
(increase(validator_monitor_attestation_simulator_head_attester_hit_total[$__range]) +
increase(validator_monitor_attestation_simulator_head_attester_miss_total[$__range]))

"Target Vote Success" Query

increase(validator_monitor_attestation_simulator_target_attester_hit_total[$__range]) /
(increase(validator_monitor_attestation_simulator_target_attester_hit_total[$__range]) +
increase(validator_monitor_attestation_simulator_target_attester_miss_total[$__range]))
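Both queries compute the same ratio: hits divided by hits plus misses over the selected time range. As a rough illustration of that arithmetic outside PromQL, here is a small Python sketch. The function name is hypothetical, and returning `None` when there are no samples is a choice made for this sketch (the PromQL division would yield NaN in that case).

```python
from typing import Optional


def vote_success(hit_increase: float, miss_increase: float) -> Optional[float]:
    """Return hits / (hits + misses), or None if nothing was evaluated."""
    total = hit_increase + miss_increase
    if total <= 0:
        # No simulated attestations were evaluated in the range.
        return None
    return hit_increase / total


if __name__ == "__main__":
    # 995 hits and 5 misses over the range gives a 99.5% success rate.
    print(vote_success(995, 5))  # 0.995
```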

This dashboard can be downloaded at sigp/lighthouse-metrics.

Limitations

These metrics give a great view of the BN's ability to observe and import blocks. However, there are limitations:

- Simulated attestations are never published, so they provide no insight into a node's ability to publish to the network.

- The Validator Client (VC) is not involved in attestation simulation, so these metrics include nothing about its performance or the latency of its connection to the BN.

Regardless of these limitations, attestation performance is often a measure of how expediently a BN can observe and import blocks. The attestation simulator simply and comprehensively covers both of these aspects.

Performance Factors

Where performance is related to block observation and import speeds, I've observed the following factors to have a significant impact:

- Geographic location: being further away from the validator who produced the block means the block will, on average, be received later. I've found this to be the most impactful factor in attestation performance.

- CPU: processing a block is CPU intensive and faster CPUs import blocks quicker. In particular, I've observed that CPUs with SHA extensions perform significantly better. CPUs with SHA extensions are widely available (retail and cloud) and I would consider them a must-have for all future builds. Look for "SHA256 hardware acceleration: true" in the output of lighthouse --version.
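The SHA extensions check mentioned in the CPU point above could be scripted along these lines. This is only a sketch: it assumes the marker appears on a single line followed by `true` or `false`, and the sample strings in the usage section are invented, not real `lighthouse --version` output.

```python
import subprocess
from typing import Optional

MARKER = "SHA256 hardware acceleration:"


def has_sha_acceleration(version_output: str) -> Optional[bool]:
    """Return True/False from the marker line, or None if it is absent."""
    for line in version_output.splitlines():
        if MARKER in line:
            value = line.split(MARKER, 1)[1].strip().lower()
            return value == "true"
    return None


def check_local_lighthouse() -> Optional[bool]:
    """Run `lighthouse --version` (must be on PATH) and inspect its output."""
    result = subprocess.run(
        ["lighthouse", "--version"],
        capture_output=True,
        text=True,
        check=True,
    )
    return has_sha_acceleration(result.stdout)


if __name__ == "__main__":
    # Invented sample text; the real output layout may differ.
    print(has_sha_acceleration("SHA256 hardware acceleration: true"))
    print(has_sha_acceleration("no marker here"))
```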

There are likely a myriad of other things which affect performance that I have not covered or investigated. RAM speed, for example, is something that I have not investigated and may have some material impact. Disk speed can also play a part. However, Lighthouse works hard to avoid using the disk during block import, so I suspect any NVMe drive is sufficient. There is much to learn about optimal staking hardware and I hope tools like the attestation simulator can enable this research.

As an exercise, we can compare the metrics from the earlier Grafana screenshot and see what we can learn about the factors that influence performance. The six example nodes are mainnet nodes that Sigma Prime uses for experimentation and testing. The table below shows the nodes from the Grafana screenshot with additional info about each node.

| Head Success % | Hostname | Location | Internet | CPU | SHA Extensions | EE |
|---|---|---|---|---|---|---|
| 99.5% | helsinki-ryzen-3600 | Hetzner Datacenter in Helsinki | Fibre | 6c @ 3.6-4.2GHz, Launched 2019 | Yes | Nethermind |
| 99.4% | helsinki-ryzen-7700 | Hetzner Datacenter in Helsinki | Fibre | 8c @ 3.8-5.3GHz, Launched 2023 | Yes | Besu |
| 98.5% | sydney-ryzen-5800x | OVH Datacenter in Sydney | Fibre | 8c @ 3.8-4.7GHz, Launched 2020 | Yes | Geth |
| 97.3% | singapore-xeon-2136 | OVH Datacenter in Singapore | Fibre | 6c @ 3.3-4.5GHz, Launched 2023 | No | Nethermind |
| 97.2% | australia-intel-13700h | Residence in Regional Australia | 100/25Mbps Copper | 6c @ 5GHz + 8c @ 3.7GHz, Launched 2023 | Yes | Nethermind |
| 97.1% | australia-ryzen-7735h | Residence in Regional Australia | 100/25Mbps Copper | 8c @ 3.2-4.75GHz, Launched 2023 | Yes | Nethermind |

There's quite a lot of information here and the experiment uses a small sample size with poor controls for hardware, EE and location. However, I think there's still some information buried within.

Firstly, we can see that being in Europe gives an advantage over being in APAC. I suspect this is simply a matter of network latency: more validators are producing blocks in Europe, so nodes in Europe see those blocks sooner.
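To put a rough number on the Europe versus APAC gap, the head success figures from the table can be averaged per region. The sketch below does that in Python. The "Europe" and "APAC" labels are a grouping assumed for this illustration, and with only six nodes the result is indicative at best.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

# Head success percentages copied from the table above.
# The region labels are an assumption made for this sketch.
NODES: List[Tuple[str, str, float]] = [
    ("helsinki-ryzen-3600", "Europe", 99.5),
    ("helsinki-ryzen-7700", "Europe", 99.4),
    ("sydney-ryzen-5800x", "APAC", 98.5),
    ("singapore-xeon-2136", "APAC", 97.3),
    ("australia-intel-13700h", "APAC", 97.2),
    ("australia-ryzen-7735h", "APAC", 97.1),
]


def mean_head_success_by_region(
    nodes: List[Tuple[str, str, float]],
) -> Dict[str, float]:
    """Group nodes by region and average their head success percentage."""
    by_region: Dict[str, List[float]] = defaultdict(list)
    for _hostname, region, pct in nodes:
        by_region[region].append(pct)
    return {region: mean(values) for region, values in by_region.items()}


if __name__ == "__main__":
    for region, avg in mean_head_success_by_region(NODES).items():
        print(f"{region}: {avg:.2f}%")
```

On these six nodes the averages come out at roughly 99.45% for Europe and about 97.5% for APAC, a gap of just under two percentage points.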

Both the australia-intel-13700h and australia-ryzen-7735h are NUCs running in a home setting (ASUS PN64 and ASUS PN53, respectively). These NUCs are running 32GB of DDR5 4800 RAM and Samsung 980 Pro 2TB NVMes. They cost ~AU$1,400 each.

We can see the sydney-ryzen-5800x in a Sydney data-center is performing ~1.3% better than the nodes at home. However, we can see that the singapore-xeon-2136 in the Singapore data-center performs practically identically to the nodes at home. The Singapore host costs ~AU$256 monthly, which would buy a NUC in less than 6 months. From this we might decide that a primary BN in a data-center plus a backup BN at home is appealing to the wallet whilst helping with decentralization.
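The "less than 6 months" figure is simple division of the NUC price by the monthly hosting cost. A quick Python sketch of that arithmetic follows. It uses only the two prices quoted above and deliberately ignores electricity, internet and maintenance costs, which a real comparison would need to include.

```python
def months_to_break_even(purchase_cost: float, monthly_rent: float) -> float:
    """Months of rent that add up to the one-off purchase cost."""
    if monthly_rent <= 0:
        raise ValueError("monthly_rent must be positive")
    return purchase_cost / monthly_rent


if __name__ == "__main__":
    # ~AU$1,400 NUC versus ~AU$256 per month for the Singapore host.
    print(months_to_break_even(1400, 256))  # 5.46875
```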

Both sydney-ryzen-5800x and singapore-xeon-2136 are running in OVH APAC data-centers, however the Ryzen is performing better than the Xeon. I suspect this is because the Xeon lacks SHA extensions. I have observed this several times across our fleet; SHA extensions make a difference.

Both the NUCs are running at the same house. The Intel has consistently had a slight edge on the Ryzen. They're both running the same RAM and NVMe, so the Intel CPU appears to be performing marginally better.

Interestingly, the leading box helsinki-ryzen-3600 has an edge on helsinki-ryzen-7700, even though the former has a significantly less powerful CPU and slower NVMe drive. However, when we look at different time-spans we can see that these nodes oscillate between first and second place. This leads me to believe that the hardware difference between the two is marginal. This suggests that getting a good deal on hardware from a few years ago might be more cost effective than buying the bleeding edge (as long as you're getting SHA extensions in both cases). Alternatively, it could be that the Nethermind/Besu difference between them is telling us something; further research would be required to reach a firm conclusion.

Finally, here's the obligatory "don't use Hetzner for production" comment.

Summary

The attestation simulator shines a light on factors that influence attestation performance. It's a valuable tool for node operators small and large. From a rough analysis of a small dataset, we add weight to the widely held belief that running in APAC impacts performance. That performance impact can be mitigated through better hardware, but splashing big on new hardware may not be economically ideal.

In light of all this, I'd like to take a moment to consider priorities. Personal profit maximization can be a priority, but history is full of cautionary tales. When it comes to "the greater good" for validators, the Ethereum network has over 99% attestation performance on average and we only really need 66.7%. In other words, there's no shortage of high-performing validators.

Ethereum does, however, have a shortage of validators outside of Europe and North America. It also has a shortage of validators running outside of a few big cloud providers. Tools like monitoreth.io show over 35% of nodes running in the cloud, but it's very likely that well over half of all validators are running from a handful of cloud providers.

At Sigma Prime we run all our production validators in APAC. We're an Australian company, so it's convenient for us. Furthermore, we're in the final stages of setting up our own self-hosted infrastructure at a data-center in Sydney. That data-center will ultimately cost us more than just renting cloud boxes, but we want to distinguish ourselves and sleep well knowing we're decent Ethereans. That's how we've set our priorities.

When designing or upgrading your staking setup, consider what you can do to help the Ethereum network. If you're outside of the US/EU, consider running your nodes locally. If you want to run in the US or EU, consider introducing some nodes to the home or office. Use the attestation simulator to figure out the real economic cost and make an informed decision. The cost of being different might be less than you think.